The Research That Changed Everything

Part 2: How Google Gave AI to the World (2012-2024).

ChatGPT runs on Google research. So does Claude. So does Gemini. So does nearly every AI tool you've used in the past two years.

The technology powering the current AI revolution — the Transformer architecture — came from a Google research paper published in 2017. But Google didn't keep it proprietary. They published it openly, shared the code, and let competitors build on it.

This wasn't an accident. It was strategy.

Here are seven milestones that show how Google didn't just build AI for itself: it gave the foundational tools to the world.

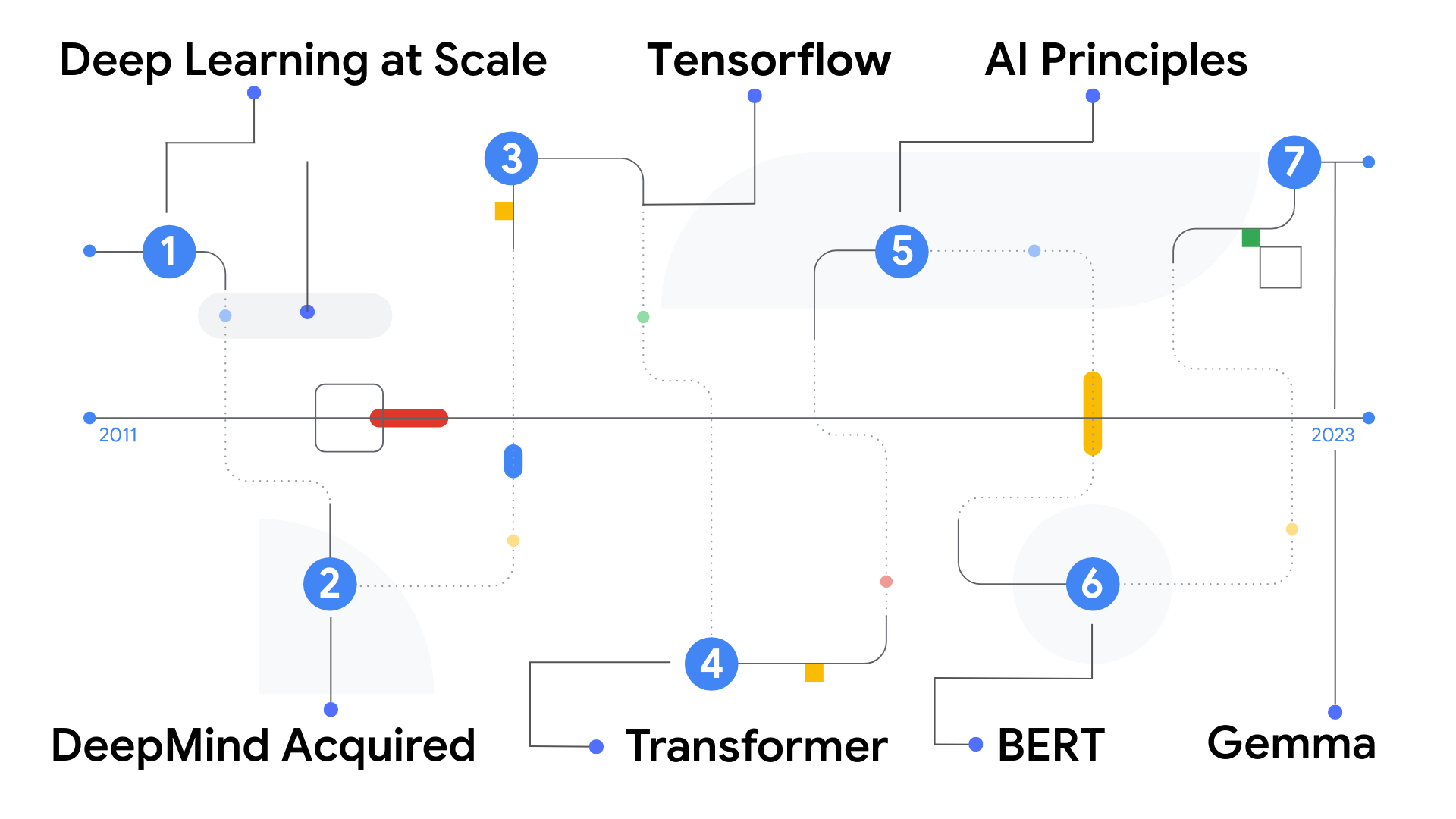

2012: The Year Deep Learning Proved Itself

The Cat Experiment: Unsupervised Learning at Scale

In 2012, the Google Brain team proved something remarkable: neural networks could learn without being told what to look for.

Their famous experiment fed a neural network 10 million still images sampled from YouTube videos. Without any labels or instructions, the network developed a neuron that responded strongly to cats. No one had told it what a cat looked like; cat faces simply appeared so often in the data that the network picked out the pattern on its own.

This was unsupervised learning at scale. It demonstrated that with enough data and computing power, machines could discover patterns humans never explicitly programmed.

The implications were enormous. This wasn't just about cats: it was evidence that deep learning could work on real-world, messy, unlabeled data.
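The Google Brain network was a very large sparse autoencoder, and nothing that size fits in a blog post. The sketch below swaps in a much simpler unsupervised method, k-means clustering, purely to illustrate the idea of learning without labels: the function receives unlabeled points and discovers the groups on its own. The function name, the toy data, and the choice of k-means are illustrative assumptions, not Google's method.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Cluster 2-D points into k groups using only the points themselves (no labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for x, y in points:
            nearest = min(
                range(k),
                key=lambda i: (x - centroids[i][0]) ** 2 + (y - centroids[i][1]) ** 2,
            )
            clusters[nearest].append((x, y))
        # Update step: move each centroid to the average of its assigned points.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = (
                    sum(px for px, _ in members) / len(members),
                    sum(py for _, py in members) / len(members),
                )
    return centroids, clusters


if __name__ == "__main__":
    data = [(0, 0), (1, 0), (0, 1), (1, 1), (10, 10), (11, 10), (10, 11), (11, 11)]
    centers, groups = kmeans(data, k=2)
    print(sorted(centers))  # one center per blob, found without any labels
```

Given the two well-separated blobs in the demo, the centroids settle at (0.5, 0.5) and (10.5, 10.5) even though the algorithm is never told which point belongs where.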

Speech Recognition: Deep Learning Enters Production

That same year, deep learning made its first major leap into products people used daily. A landmark collaboration among four research groups (Google, Microsoft, IBM, and the University of Toronto) published research showing that deep neural networks dramatically outperformed the speech recognition systems that had dominated for over 30 years.

The previous standard, Gaussian Mixture Models (GMMs) paired with hidden Markov models, had reached a plateau. Deep neural networks shattered it. In August 2012, Google announced that the new approach, already live in Google Voice Search with the launch of Android Jelly Bean, had reduced error rates by over 20%.
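Speech recognition accuracy is usually reported as word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the system's transcript into the correct one, divided by the number of words in the correct transcript. Improvement figures like "over 20%" are typically relative reductions, so a WER falling from 15% to 12% counts as a 20% reduction. The sketch below computes both with a standard edit-distance table; the function names and example sentences are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = edits needed to turn the first i reference words into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or exact match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


def relative_reduction(old: float, new: float) -> float:
    """Fraction by which errors fell, e.g. 0.2 means 20% fewer errors than before."""
    return (old - new) / old


if __name__ == "__main__":
    print(word_error_rate("call mom on her cell phone", "call tom on her cell"))
    print(relative_reduction(0.15, 0.12))
```

In the demo, "mom" heard as "tom" is one substitution and the missing "phone" is one deletion, so the WER is 2 errors out of 6 reference words.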

The gains kept compounding. By 2015, Google Voice Search had moved to LSTM (Long Short-Term Memory) networks, recurrent networks that can "remember" context from earlier in an utterance, cutting errors further. None of this was a research demo: it was Google Voice Search getting measurably better for hundreds of millions of users.
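To make "remember context" concrete, here is a minimal single-unit LSTM step in plain Python. It follows the standard LSTM gating: a forget gate decides how much of the old cell state to keep, an input gate decides how much new content to write, and an output gate decides how much of the memory to expose. The weights are hypothetical hand-picked numbers rather than trained values, and real speech recognizers use large vectors and matrices, so treat this as a sketch of the mechanism only.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a scalar LSTM cell.

    w maps each gate name to (input weight, recurrent weight, bias).
    Returns the new hidden state h and cell state c.
    """
    def pre(gate):
        wx, wh, b = w[gate]
        return wx * x + wh * h_prev + b

    f = sigmoid(pre("forget"))        # how much old memory to keep
    i = sigmoid(pre("input"))         # how much new content to write
    g = math.tanh(pre("candidate"))   # the new content itself
    o = sigmoid(pre("output"))        # how much memory to reveal
    c = f * c_prev + i * g            # updated cell state (the "memory")
    h = o * math.tanh(c)              # hidden state passed to the next step
    return h, c


if __name__ == "__main__":
    # Hypothetical weights: the input gate opens only for a strong input,
    # and a large forget-gate bias keeps the memory alive afterwards.
    weights = {
        "forget": (0.0, 0.0, 5.0),
        "input": (10.0, 0.0, -5.0),
        "candidate": (2.0, 0.0, 0.0),
        "output": (0.0, 0.0, 5.0),
    }
    h, c = 0.0, 0.0
    for x in [1.0, 0.0, 0.0, 0.0]:  # one signal, then silence
        h, c = lstm_step(x, h, c, weights)
        print(f"x={x:.1f}  cell={c:.3f}")
```

With the forget-gate bias set high, the value written at the first step is still above 0.9 three steps later; flip that bias to a large negative number and the memory fades almost immediately.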

Together, these 2012 breakthroughs proved deep learning's dual promise: it could discover patterns autonomously (the cat experiment), and it could immediately improve products at scale (speech recognition). The AI era had a new foundation.

2014: DeepMind Joins Google

When Google acquired DeepMind in 2014, they weren't buying a product. They were buying a vision.

DeepMind's mission was ambitious: solve intelligence, then use it to solve everything else. Their approach combined deep learning with reinforcement learning — systems that learn by trial and error, the way humans learn to walk or play games.
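To make "trial and error" concrete, here is a minimal reinforcement-learning sketch: an epsilon-greedy agent facing a hypothetical three-armed bandit (think of three slot machines with unknown payout rates). It is a toy, far simpler than anything DeepMind built, but it shows the core loop: act, observe a reward, update estimates, and gradually favor what works while still exploring occasionally. The function name, payout probabilities, and parameters are illustrative assumptions.

```python
import random


def run_bandit(payout_probs, steps=5000, epsilon=0.1, seed=42):
    """Learn which action pays best purely from trial and error."""
    rng = random.Random(seed)
    n = len(payout_probs)
    estimates = [0.0] * n  # running average reward observed for each action
    counts = [0] * n       # how many times each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try something at random
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit the current best guess
        reward = 1.0 if rng.random() < payout_probs[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the reward just observed.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


if __name__ == "__main__":
    est, cnt = run_bandit([0.2, 0.5, 0.8])
    print("estimated payouts:", [round(e, 2) for e in est])
    print("times chosen:     ", cnt)
```

With payout rates of 0.2, 0.5, and 0.8, the agent should end up choosing the third action most of the time, with its estimate for that action close to 0.8, even though nobody told it which machine was best.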

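Two years later, in March 2016, DeepMind's AlphaGo defeated Lee Sedol, one of the world's strongest Go players, four games to one. Go is so complex that brute-force computing couldn't solve it, so the system had to develop something like intuition: neural networks that judged which moves and positions looked promising, guiding its search through possible futures.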
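A quick back-of-the-envelope count shows why brute force is hopeless. A 19×19 board has 361 points, and each can be empty, black, or white, which gives an upper bound on board configurations:

```latex
19 \times 19 = 361
\qquad
3^{361} = 10^{361 \log_{10} 3} \approx 10^{361 \times 0.4771} \approx 10^{172.24} \approx 1.7 \times 10^{172}
```

Only some of those configurations are legal positions; John Tromp's 2016 exact count puts the number of legal 19×19 positions at about 2.1 × 10^170. No computer can enumerate a space that size, which is why learned judgment mattered more than raw search.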

This wasn't just a publicity stunt. It proved that AI could master domains requiring creativity and strategic thinking, not just pattern recognition.

2015: TensorFlow Goes Open Source

Here's where strategy gets interesting.

In November 2015, Google released TensorFlow — their internal machine learning framework — as open-source software. Anyone could download it, use it, modify it, build on it.

Why give away your competitive advantage?

Because when everyone builds on your framework, you shape the ecosystem. Researchers worldwide started publishing papers using TensorFlow. Students learned AI on TensorFlow. Startups built products on TensorFlow. The framework became the common language of machine learning.

Today, TensorFlow has been downloaded over 170 million times. It's used in healthcare diagnostics, climate modeling, financial analysis, and thousands of applications Google will never build themselves.

The lesson: sometimes the best way to win is to make everyone else successful on your platform.

2017: "Attention Is All You Need"

If there's one research paper that defines the current AI era, it's this one.

In June 2017, Google researchers published "Attention Is All You Need" — introducing the Transformer architecture. The paper's title was almost playfully confident. It was also accurate.

Before Transformers, leading language models (recurrent networks such as LSTMs) processed text sequentially, one word at a time, like reading a sentence left to right. Attention mechanisms already existed, but the Transformer dropped recurrence and relied on self-attention alone, letting a model weigh every word against every other word in parallel and capture context and relationships across entire passages.
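The core operation the paper introduced is scaled dot-product attention, softmax(QKᵀ / √d_k)·V: each word's query vector is compared with every word's key vector, the scores become weights, and each output is a weighted mix of value vectors. The sketch below implements that formula in plain Python for a single attention head with no learned projections; the toy vectors are hypothetical, and real models add learned weight matrices, multiple heads, and many stacked layers.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one output row per token."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score this token against every token in the sequence, all at once.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of all value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0]]  # two toy token vectors used as Q, K, and V
    for row in attention(tokens, tokens, tokens):
        print([round(x, 2) for x in row])
```

For the two toy tokens, each output leans toward its own value vector (roughly two-thirds of the weight) while still mixing in the other token; when all keys are identical, attention reduces to a plain average of the values.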

This architecture became the foundation for:

- GPT (OpenAI's ChatGPT)

- Claude (Anthropic)

- Gemini (Google)

- LLaMA (Meta)

- Virtually every large language model in existence

Google published the research openly and did not restrict who could build on it. The result: competitors built billion-dollar products on Google's innovation.

Was this a mistake? Or was it a bet that advancing the entire field would ultimately benefit Google more than hoarding the research?

2018: AI Principles

As AI capabilities grew, so did concerns about misuse. In 2018, Google published their AI Principles, a public commitment to developing AI responsibly.

The principles include commitments to:

- Be socially beneficial.

- Avoid creating or reinforcing unfair bias.

- Be built and tested for safety.

- Be accountable to people.

- Incorporate privacy design principles.

This wasn't just PR. Google explicitly listed applications it would not pursue, including weapons, surveillance that violates internationally accepted norms, and technologies that cause or are likely to cause overall harm.

Publishing these principles publicly created accountability. It also influenced the broader industry conversation about AI ethics, pushing competitors to articulate their own standards.

2018: BERT Transforms Search

The same year, Google introduced BERT (Bidirectional Encoder Representations from Transformers). When Google began using BERT in Search in 2019, Search got dramatically smarter.

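Previous search algorithms struggled with prepositions and context, often treating small words like "for" and "to" as unimportant. In the query "can you get medicine for someone pharmacy," the words "for someone" carry the meaning: the searcher wants to know whether they can pick up a prescription on another person's behalf, not how pharmacies work in general.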

BERT handled that nuance. Instead of matching keywords, it read each word in light of the words on both sides of it (the "bidirectional" in its name), much closer to how people read.

Google called it the biggest leap forward for Search in five years. And once again, the model itself was open: Google had released BERT publicly in 2018, letting researchers and competitors build on it.
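Where does the bidirectional reading come from? During pretraining, BERT hides some words and learns to predict them from the words on both sides, a task called masked language modeling. The sketch below builds one such training example using the recipe described in the BERT paper: select about 15% of tokens, replace 80% of those with a mask symbol, 10% with a random word, and leave 10% unchanged. It splits on whitespace for simplicity (BERT actually uses WordPiece subword tokens), and the function name and vocabulary are hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Build one masked-language-model training example in the style of BERT pretraining.

    Returns (corrupted_tokens, targets), where targets maps position -> original token.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    targets = {}
    for pos, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # most tokens are left alone and not predicted
        targets[pos] = token  # the model must recover this word from context on BOTH sides
        roll = rng.random()
        if roll < 0.8:
            corrupted[pos] = MASK               # 80%: hide the word behind the mask symbol
        elif roll < 0.9:
            corrupted[pos] = rng.choice(vocab)  # 10%: swap in a random word
        # remaining 10%: keep the original word unchanged
    return corrupted, targets


if __name__ == "__main__":
    query = "can you get medicine for someone pharmacy".split()
    print(mask_tokens(query, vocab=["the", "a", "drug", "store"], mask_prob=0.3))
```

If a position like "someone" is masked, the model can only recover it by using both "for" on its left and "pharmacy" on its right, which is exactly the two-sided reading that helped Search.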

2024: Gemma — Open Models for Everyone

The pattern continues.

In February 2024, Google released Gemma, a family of lightweight, open-weight models built from the same research and technology behind Gemini. These aren't watered-down versions: Google positioned them as best-in-class for their size, small enough to run on laptops, desktops, and other local hardware.

Gemma represents a shift in how AI gets deployed. Instead of everything running in massive data centers, capable AI can now run locally, which keeps data on the device, can reduce latency, and puts it within reach of developers without cloud budgets.
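Why can a small model fit on a laptop? A rough rule of thumb: weight memory ≈ parameter count × bytes per parameter. The sketch below applies it to the nominal 2B and 7B sizes Gemma launched with. It counts weights only, ignoring activations, the attention cache, and runtime overhead, and the precisions listed are common choices rather than anything specific to Gemma; the dictionary and function names are illustrative.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for label, params in [("2B", 2e9), ("7B", 7e9)]:
        for dtype in BYTES_PER_PARAM:
            print(f"{label} @ {dtype}: ~{weight_memory_gb(params, dtype):.1f} GB")
```

By this estimate, a 7-billion-parameter model needs about 14 GB at 16-bit precision but only about 3.5 GB at 4-bit precision, which is the kind of reduction that brings capable models within reach of ordinary hardware.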

By releasing Gemma openly, Google is betting on the same strategy that worked with TensorFlow: shape the ecosystem, build the tools everyone else uses, and benefit from the innovation that follows.

The Pattern Worth Noticing

Looking at these seven milestones, Google's research strategy becomes clear:

- 2012-2014: Prove what's possible (Deep Learning, DeepMind)

- 2015-2018: Give away the tools (TensorFlow, Transformers, BERT)

- 2018-2024: Shape the standards (AI Principles, Gemma)

This is counterintuitive. Traditional business logic says protect your advantages. But in AI research, openness moved the field forward faster than any single company could have alone.

The Transformer architecture Google published in 2017 has arguably driven more innovation in seven years than decades of proprietary AI research. Some of that innovation came back to Google. Much of it didn't. But the field moved forward.

What This Means for You

You don't need to publish research papers to apply this lesson.

The principle is simple: sometimes sharing your best work creates more value than protecting it.

Open-sourcing tools builds ecosystems. Publishing insights builds authority. Teaching what you know attracts people who want to learn.

Google's AI dominance didn't come from hoarding secrets. It came from making their research the foundation everyone else built on.

That's a strategy worth considering, whatever field you're in.

ICYMI: This article covered the research breakthroughs that made modern AI possible. But how did these innovations actually reach the products we use every day? In Part 1, I walk through Google's AI product evolution, from spell-check and spam filtering to the launch of Gemini. Read it here, or the full series here.