The AI You Already Use.

Part 1: Google's AI Timeline (2001-2023).

You've been using AI for over two decades. Not the chatbot kind that dominates headlines today. The invisible kind, correcting your typos, translating menus on vacation, surfacing the right search result before you finish typing.

Google didn't become an AI company when ChatGPT launched. They've been building AI into products since 2001. The recent explosion of generative AI isn't a pivot; it's the culmination of a bet more than two decades in the making.

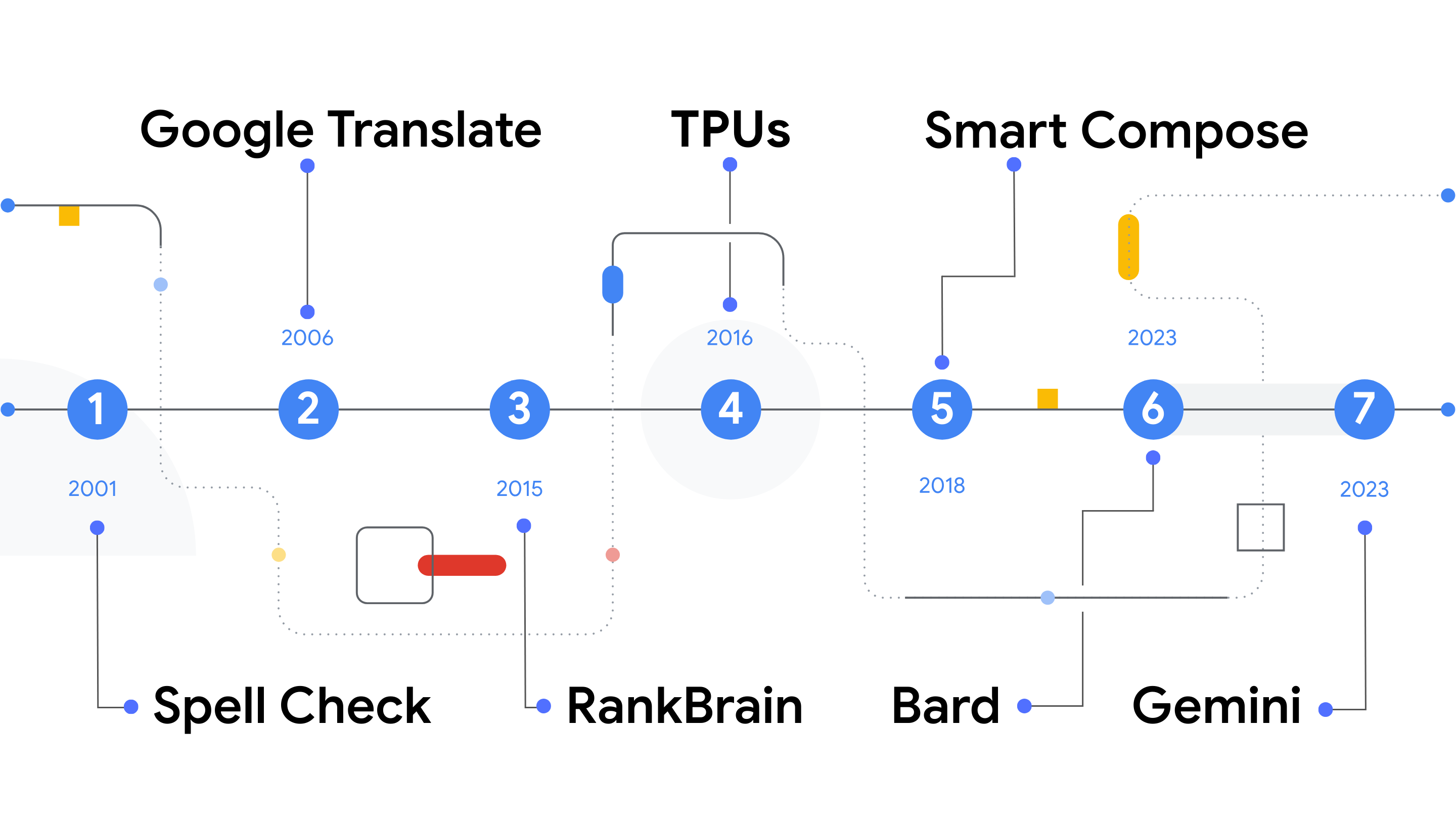

Here are seven milestones that show how AI quietly became part of your daily workflow.

2001: Machine Learning for Spell Check

Google's AI journey started with something you probably take for granted: spell check.

In 2001, Google began using machine learning to power spell correction at scale in Search. When you type "recieve" and Google suggests "receive," that's not a simple dictionary lookup. It's a system learning from billions of queries to understand what you meant to type.
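To make "learning from queries" concrete, here is a minimal sketch of frequency-based correction. It is not Google's system: the tiny `QUERY_LOG` and the helper names `edits1` and `correct` are invented for illustration, and the approach (generate near-miss spellings, then pick the one seen most often) is a classic textbook technique standing in for something far larger.

```python
from collections import Counter

# Hypothetical query log; a real system would learn from vastly more data.
QUERY_LOG = (
    "receive receive receive recipe relieve receive package receive email"
).split()
WORD_COUNTS = Counter(QUERY_LOG)
LETTERS = "abcdefghijklmnopqrstuvwxyz"


def edits1(word):
    """Return every string one edit away from word."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [left + right[1:] for left, right in splits if right]
    transposes = [
        left + right[1] + right[0] + right[2:]
        for left, right in splits
        if len(right) > 1
    ]
    replaces = [
        left + c + right[1:] for left, right in splits if right for c in LETTERS
    ]
    inserts = [left + c + right for left, right in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def correct(word):
    """Suggest the most frequently seen word within one edit of the input."""
    if word in WORD_COUNTS:
        return word  # already a known word
    candidates = [w for w in edits1(word) if w in WORD_COUNTS]
    if not candidates:
        return word  # nothing better to offer
    return max(candidates, key=WORD_COUNTS.__getitem__)


print(correct("recieve"))
```

Both "receive" and "relieve" are one edit away from "recieve"; the sketch picks "receive" only because it appears more often in the sample log. That is the sense in which the data, not a fixed dictionary, drives the suggestion.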

This was Google's first signal: AI works best when it disappears into the product.

2006: Google Translate

Five years later, Google launched Google Translate, and machine learning made the impossible feel routine.

Before this, translation software was clunky and unreliable. Google's approach was different: instead of programming grammar rules for every language, they trained models on massive datasets of translated text. The system learned patterns rather than rules.

Today, Google Translate handles 100+ languages, and Google has reported that it translates more than 100 billion words a day. Most users don't think of it as "AI." They just think it works.

2015: RankBrain in Search

By 2015, Google faced a problem: 15% of daily searches had never been seen before. Traditional keyword matching couldn't handle queries it had never encountered.

Google introduced RankBrain, a machine learning system that interprets the intent behind a search, not just the words. When you search "what's the title of the consumer at the highest level of a food chain," RankBrain understands you mean "apex predator" even though those words never appeared in your query.

This was AI moving from assistance to understanding.

2016: Tensor Processing Units (TPUs)

Here's a milestone most people missed: in 2016, Google announced Tensor Processing Units (TPUs), custom silicon designed specifically for machine learning.

Why does hardware matter? Because AI models are only as powerful as the infrastructure running them. TPUs gave Google a computational advantage that competitors couldn't easily replicate. Many of Google's AI products, from Search to Photos to Gemini, run on this foundation.

The lesson: breakthrough products often depend on invisible infrastructure investments made years earlier.

2018: Smart Compose in Gmail

If you've ever watched Gmail finish your sentence, you've experienced Smart Compose.

Launched in 2018, Smart Compose uses AI to predict what you're about to type and offers to complete it. "Looking forward to..." becomes "Looking forward to hearing from you." It learns from your writing patterns and adapts to your style over time.
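The idea of predicting the next words from past writing can be sketched with a toy word-pair model. This is an illustration under stated assumptions, not how Smart Compose is built: the `SENT_EMAILS` sample and the `train` and `complete` helpers are hypothetical, and the real feature is far more sophisticated than counting which word tends to follow which.

```python
from collections import Counter, defaultdict

# Hypothetical sample of previously written sentences.
SENT_EMAILS = [
    "looking forward to hearing from you",
    "looking forward to hearing from you soon",
    "looking forward to the meeting",
    "thanks for hearing me out",
]


def train(sentences):
    """Count, for each word, which words followed it and how often."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def complete(prefix, following, max_words=3):
    """Extend the prefix by repeatedly choosing the most common next word."""
    words = prefix.lower().split()
    if not words:
        return ""
    suggestion = []
    last = words[-1]
    for _ in range(max_words):
        if last not in following:
            break  # no data on what follows this word
        last = following[last].most_common(1)[0][0]
        suggestion.append(last)
    return " ".join(suggestion)


model = train(SENT_EMAILS)
print(complete("Looking forward to", model))
```

With this sample, "Looking forward to" is completed with "hearing from you" because that continuation appears most often. Train the same sketch on a different person's sentences and the suggestions change, which is the personalization the feature is known for.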

This was AI becoming personal: not just understanding language generally, but understanding your language specifically.

2023: Bard

When ChatGPT launched in late 2022, the world suddenly paid attention to conversational AI. Google responded in early 2023 with Bard, their first public experiment in generative AI.

Bard was positioned as a collaborator, not just an answer engine. You could brainstorm ideas, draft content, explore topics through dialogue. It was imperfect and clearly experimental, but it marked a shift: AI was no longer invisible. It was the product itself.

2023: Gemini

Later that year, Google introduced Gemini, and the architecture changed entirely.

Gemini is multimodal, meaning it understands text, images, audio, and video natively. Previous models processed different formats separately, then combined results. Gemini was trained on all formats together from the start.

The result: an AI that can watch a video and answer questions about it, analyze charts and graphs directly, or understand a photo you took and explain what you're looking at.

With Gemini 1.5 and beyond, Google expanded context windows to handle entire books, codebases, and hour-long videos in a single prompt. Gemini Advanced brought these capabilities to consumers, while the model family now powers features across Search, Gmail, Docs, and the rest of Workspace.

This isn't iteration. It's a new foundation.

The Pattern Worth Noticing

Looking at these seven milestones, a pattern emerges:

2001-2015: AI as invisible enhancement (spell check, translate, search ranking).

2016-2018: AI as infrastructure and personalization (TPUs, Smart Compose).

2023: AI as the interface itself (Bard, Gemini).

Each phase built on the previous one. The 2023 breakthroughs weren't possible without two decades of accumulated research, infrastructure, and product integration.

This is what long-term compounding looks like in technology.

What This Means for You

You don't need to understand transformer architectures or tensor processing to benefit from AI. You've already been benefiting from it for years.

But understanding where these tools came from helps you see where they're going. The companies investing in AI infrastructure today are building the products you'll use in 2030.

The question isn't whether AI will be part of your workflow. It's whether you'll use it intentionally or just let it happen to you.

NEXT: Part 2: The Research That Changed Everything and How Google Gave AI to the World. In that piece, we'll explore seven research breakthroughs, including the 2017 paper behind the architecture that powers ChatGPT, Claude, and Gemini, and why Google chose to share them openly.

If you found this useful, subscribe to get Part 2 and future articles on productivity, technology, and intentional work.